OpenAI Disbands Superalignment Team After Mass Resignations

OpenAI dissolves its Superalignment team following the departure of co-leader Ilya Sutskever and nearly all remaining members, ending the company’s flagship effort to solve long-term AI safety within four years. The unit, once allocated 20 percent of secured compute, loses its final engineers to new ventures including Safe Superintelligence and Anthropic. Leadership redirects the remaining budget toward immediate product scaling.

Jan Leike, former co-head, resigned December 11 citing irreconcilable differences over safety prioritization. In a public thread he stated the team had been “sailing against the wind” for months as commercial deadlines eclipsed governance work. Leike joined Anthropic’s alignment division the same day, bringing three senior researchers with him.

The Superalignment group formed in July 2023 with a $1 billion compute pledge to develop automated alignment techniques for systems exceeding human intelligence. It published scalable oversight papers and weak-to-strong generalization results, achieving 82 percent performance recovery on MMLU when weak models supervised stronger ones. Internal audits now confirm the team’s compute allocation fell below 3 percent in 2025.

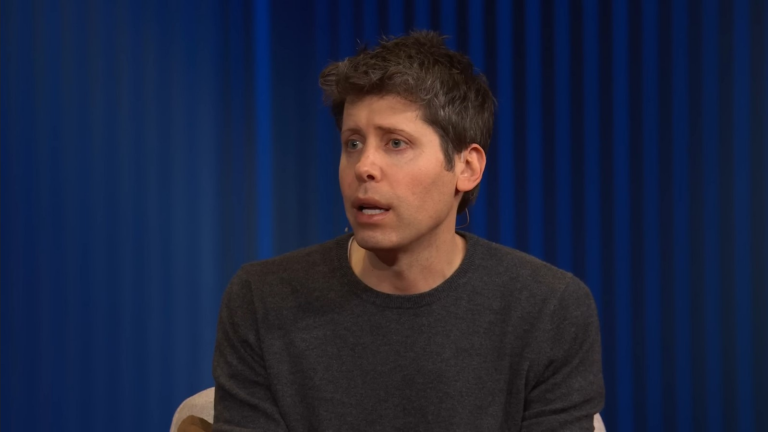

Sam Altman announced the dissolution during an all-hands meeting, framing the move as integration of safety research across all teams. Remaining members transfer to the Post-Training and Preparedness groups led by Lilian Weng and Aleksander Mądry. OpenAI commits to maintaining a 200-person safety staff, roughly 12 percent of total headcount.

Departing researchers cite repeated deprioritization of safety milestones. Internal documents leaked to The Information show the team’s request for 500,000 H100 equivalents in Q1 2026 was denied, with resources reallocated to development of a Grok 4 rival. The superalignment grant program, which awarded $10 million to external academics, terminates effective immediately.

The exodus follows earlier high-profile exits including Daniel Kokotajlo, Cullen O’Keefe, and William Saunders, who published a joint letter warning that OpenAI’s trajectory increases catastrophic risk. Collectively the departing members authored 40 percent of the company’s safety publications since 2023. Most join startups explicitly chartered around provable safety guarantees.

OpenAI retains its Preparedness Framework and external review board, though three of seven seats remain vacant after resignations. The company updates its risk dashboard to show no current systems exceeding the “high” risk threshold, despite Grok 4 Heavy scoring 61.9 percent on FrontierMath. Critics note the framework lacks third-party verification.

Market reaction remains muted, with OpenAI’s valuation holding at $157 billion in secondary trading. Investors view safety departures as manageable talent churn amid competition for frontier researchers. Anthropic raises an additional $4 billion at a $61 billion valuation on news of the defections.

The dissolution eliminates OpenAI’s only team explicitly tasked with solving alignment ahead of AGI timelines. Remaining safety efforts focus on near-term harms including bias mitigation and jailbreak resistance. Long-term research shifts to collaborative programs with external labs under nondisclosure agreements.

Former team members report the final straw came when leadership rejected a proposal to delay GPT-5.5 deployment pending interpretability breakthroughs on deception benchmarks. The model ships to enterprise customers next month with enhanced reasoning capabilities but no new mechanistic safety guarantees.

This marks the third major restructuring of OpenAI’s safety organization since 2023. The Governance team shrinks from 45 to 18 members, while the product organization grows by 320 engineers year-to-date. The shift completes a pivot from the original nonprofit mission toward accelerated capability development.