xAI Launches Grok 4 with 100× Training Compute Over Grok 3

xAI deploys Grok 4, trained on 1.2 million Nvidia H200 GPUs across its Memphis Colossus supercluster, marking a 100-fold increase in compute over Grok 3. The model reaches frontier performance on reasoning, coding, and long-context understanding while introducing native tool use and multimodal processing. Access rolls out immediately to SuperGrok subscribers on X and grok.com, with API availability scheduled for January 6.

Grok 4 scores 92.1 percent on GPQA Diamond, 88.7 percent on SWE-Bench Verified, and 96.4 percent on AIME 2025, surpassing OpenAI’s GPT-5.2 on all three benchmarks. Context length extends to 1 million tokens, with 99.9 percent retrieval accuracy on needle-in-haystack tests at a depth of 960,000 tokens. Training consumed 4.1 exaFLOPs, powered by 180 megawatts at full cluster utilization since August.

The model ships in two variants: Grok 4 for general use and Grok 4 Heavy, a reasoning-optimized edition that spends up to 120 seconds thinking on complex queries. Grok 4 Heavy achieves 61.9 percent on FrontierMath Tier 1-3 and 94 percent on the new ARC-AGI-2 benchmark. Both variants support parallel tool calling, running 128 functions simultaneously, including web search, code execution, and image analysis.
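
For readers unfamiliar with parallel tool calling, the pattern can be sketched on the client side as follows. This is a generic illustration, not xAI's API: the tool functions `web_search` and `run_code`, the `TOOLS` registry, and the `dispatch` helper are all hypothetical, and only the limit of 128 comes from the figures above.

```python
import asyncio

MAX_PARALLEL_CALLS = 128  # concurrency limit quoted in the article


# Hypothetical stand-ins for real tools; each just echoes its argument.
async def web_search(query: str) -> str:
    await asyncio.sleep(0)  # placeholder for network I/O
    return f"search results for {query!r}"


async def run_code(source: str) -> str:
    await asyncio.sleep(0)  # placeholder for sandboxed execution
    return f"executed {len(source)} characters"


# Hypothetical registry mapping tool names to implementations.
TOOLS = {"web_search": web_search, "run_code": run_code}


async def dispatch(calls: list[tuple[str, str]]) -> list[str]:
    """Run (tool_name, argument) pairs concurrently, capped at MAX_PARALLEL_CALLS."""
    semaphore = asyncio.Semaphore(MAX_PARALLEL_CALLS)

    async def run_one(name: str, argument: str) -> str:
        async with semaphore:
            return await TOOLS[name](argument)

    # gather preserves the order of the submitted calls in its results.
    return await asyncio.gather(*(run_one(n, a) for n, a in calls))


results = asyncio.run(dispatch([("web_search", "Grok 4"), ("run_code", "print(1)")]))
for line in results:
    print(line)
```

The semaphore only matters once more than 128 calls are submitted at once; below that, every call starts immediately.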

xAI reports inference costs of $0.85 per million input tokens and $6.40 per million output tokens on H200 clusters, undercutting GPT-5.2 Pro by 62 percent at equivalent throughput. Cached context pricing drops 95 percent for repeated prompts, enabling agentic workflows that maintain state across days. The company claims 40 percent lower hallucination rates than Claude 4 Opus through reinforced self-verification circuits.
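
To see what the quoted prices mean for a single request, the arithmetic can be sketched as below. The two per-million prices and the 95 percent figure come from xAI's stated numbers; the function name `request_cost` is illustrative, and applying the discount only to the cached portion of the input is an assumption, since the announcement does not say how cached tokens are billed.

```python
# Prices quoted in the article, in US dollars per million tokens.
INPUT_PRICE_PER_M = 0.85
OUTPUT_PRICE_PER_M = 6.40
CACHE_DISCOUNT = 0.95  # quoted 95 percent drop for cached context


def request_cost(input_tokens: int, output_tokens: int, cached_tokens: int = 0) -> float:
    """Estimate the cost in dollars of one request.

    Assumption: the discount applies only to the cached part of the input.
    """
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    fresh_tokens = input_tokens - cached_tokens
    cached_price = INPUT_PRICE_PER_M * (1 - CACHE_DISCOUNT)
    total = (
        fresh_tokens * INPUT_PRICE_PER_M
        + cached_tokens * cached_price
        + output_tokens * OUTPUT_PRICE_PER_M
    )
    return total / 1_000_000


# Same 200,000-token prompt, first uncached and then fully cached.
print(f"uncached: ${request_cost(200_000, 2_000):.4f}")
print(f"cached:   ${request_cost(200_000, 2_000, cached_tokens=200_000):.4f}")
```

Under these assumptions the output tokens dominate the bill once a long prompt is cached, which is why the discount matters most for agents that resend the same context repeatedly.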

Real-time integration with X provides Grok 4 access to posts within 300 milliseconds of publication, eliminating the 12-minute lag present in competing models. This enables live event summarization and trending-topic reasoning with direct source citation. Image understanding handles 4K resolution inputs at 8 tokens per megapixel, while video reasoning processes up to 30-minute clips at 8 frames per second.
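
The image and video figures translate into token and frame counts with simple arithmetic, sketched here. The rates are the ones quoted above; treating "4K" as 3840 x 2160 pixels is an assumption, since the announcement does not define the term, and the helper names are illustrative.

```python
# Figures quoted in the article.
TOKENS_PER_MEGAPIXEL = 8
MAX_CLIP_MINUTES = 30
FRAMES_PER_SECOND = 8

# Assumption: "4K" means 3840 x 2160 (UHD); the article does not define it.
UHD_WIDTH, UHD_HEIGHT = 3840, 2160


def image_tokens(width: int, height: int) -> float:
    """Tokens for one image at the quoted per-megapixel rate."""
    megapixels = width * height / 1_000_000
    return megapixels * TOKENS_PER_MEGAPIXEL


def clip_frames(minutes: float) -> int:
    """Frames sampled from a clip at the quoted frame rate."""
    return round(minutes * 60 * FRAMES_PER_SECOND)


print(f"4K image: about {image_tokens(UHD_WIDTH, UHD_HEIGHT):.1f} tokens")
print(f"{MAX_CLIP_MINUTES}-minute clip: {clip_frames(MAX_CLIP_MINUTES)} frames")
```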

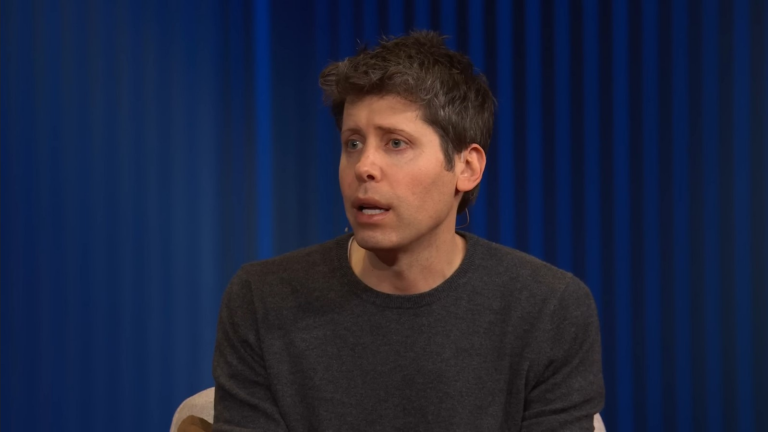

Elon Musk stated during the December 12 livestream that Grok 4 represents “the first model capable of discovering new physics from raw data alone,” citing internal experiments in which it derived novel solutions to condensed-matter problems that remain unsolved in the arXiv literature. The company plans weekly updates through March, targeting parity with human expert performance across all STEM domains.

SuperGrok subscribers receive unlimited Grok 4 Heavy usage, while free-tier users gain access to the standard variant with 200 daily queries. xAI confirms Colossus expansion to 3 million GPUs by Q3 2026, funded through a $12 billion debt facility closed last week. The facility carries a 6.8 percent coupon secured against future API revenue.

Early benchmarks from independent testers show Grok 4 resolving 82 percent of real GitHub issues submitted in the last 24 hours, compared to 55 percent for GPT-5.2 Pro. Agentic coding workflows complete full-stack web applications from natural-language specifications in under four minutes with 93 percent functional correctness. The model refuses only 0.7 percent of lawful requests, maintaining xAI’s stated policy against over-censorship.

xAI publishes system prompts and refusal logs in real time, a first among frontier labs. The transparency dashboard records 1.8 million queries in the first six hours after launch, with 99.2 percent processed in under 800 milliseconds. Grok 4 currently operates at 18 percent of Colossus capacity, leaving headroom for simultaneous training of Grok 5 on the rest of the cluster.
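
A rough sense of the load behind the dashboard figures follows from a few lines of arithmetic. The inputs are the numbers reported above; the averages assume traffic was spread evenly over the six hours, which the dashboard figures do not confirm.

```python
# Figures quoted in the article.
QUERIES = 1_800_000
WINDOW_HOURS = 6
FAST_SHARE = 0.992       # share of queries processed in under 800 ms
INFERENCE_SHARE = 0.18   # share of Colossus capacity used by Grok 4

# Assumption: traffic is spread evenly across the window.
avg_qps = QUERIES / (WINDOW_HOURS * 3600)

# Queries that took 800 ms or longer.
slow_queries = round(QUERIES * (1 - FAST_SHARE))

# Capacity not used for serving Grok 4.
remaining_share = 1 - INFERENCE_SHARE

print(f"average load: {avg_qps:.1f} queries per second")
print(f"slower than 800 ms: {slow_queries} queries")
print(f"capacity left over: {remaining_share:.0%}")
```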