Japan Establishes AI Copyright Consultation Window Amid Sora 2 Infringement Fears

Japanese authorities launch a dedicated support mechanism for creators grappling with unauthorized replication of their content by generative artificial intelligence. The initiative targets rights holders facing intellectual property violations from tools that produce hyper-realistic videos indistinguishable from originals. The policy responds to escalating misuse of anime and manga assets, which threatens cultural industries worth trillions of yen in annual exports.

The Agency for Cultural Affairs integrates the consultation service into its established anti-piracy portal, providing free legal advice from attorneys who specialize in AI. Funding totals 20 million yen from the supplementary budget approved on November 28, with operations slated to begin by the end of the fiscal year in March. Consultations focus on infringement claims, evidence gathering, and dispute resolution protocols under Japan’s Copyright Act revisions. The portal already handles general piracy reports, but this addition addresses AI-specific challenges such as undetectable alterations.

OpenAI’s ‘Sora 2’, released on September 30, generates videos of up to 25 seconds at 1080p resolution from text prompts, incorporating synchronized audio, dialogue, and physics-accurate animations. The model excels in complex scenarios, such as multi-character interactions or environmental dynamics, but its output carries no visible watermark, which complicates provenance verification. University of Tokyo professor Fujio Toriumi notes, “Sora 2’s videos, without a watermark, are so highly finished that they are barely noticeable even when viewed carefully. We are entering a period where the very existence of content, including videos, will undergo significant change.” Early outputs flooded platforms with unauthorized depictions of copyrighted characters from franchises like Pokémon and Demon Slayer.

Online piracy of Japanese manga and anime inflicted 2 trillion yen in damages as of 2022, with generative AI projected to amplify losses through scalable, high-fidelity fakes. Creators report a surge in takedown requests, as the tools enable rapid dissemination of infringing clips across social media. The consultation window equips users with templates for cease-and-desist notices and with liaison channels to platforms like X and YouTube. This builds on global precedents, including the European Union’s AI Act, which mandates transparency for high-risk systems.

For U.S. audiences, the move highlights trans-Pacific ripple effects from American AI exports. Japanese studios, major suppliers of content to Hollywood and streaming services, face disrupted workflows, potentially raising licensing costs for adaptations like live-action remakes. OpenAI’s policy shift to opt-in for copyrighted material, announced October 3, mandates explicit permissions, curbing broad scraping but exposing gaps in enforcement. Affected parties must now proactively register exclusions via OpenAI’s portal.

Broader cybersecurity implications emerge, as AI-generated deepfakes evade traditional detection algorithms, straining forensic tools reliant on metadata analysis. The Japanese initiative fosters bilateral collaboration, with U.S. firms like Adobe integrating similar watermarking in ‘Firefly’ video generators. Analysts estimate global AI infringement claims could exceed 500,000 annually by 2027, pressuring developers to embed provenance standards like C2PA metadata.

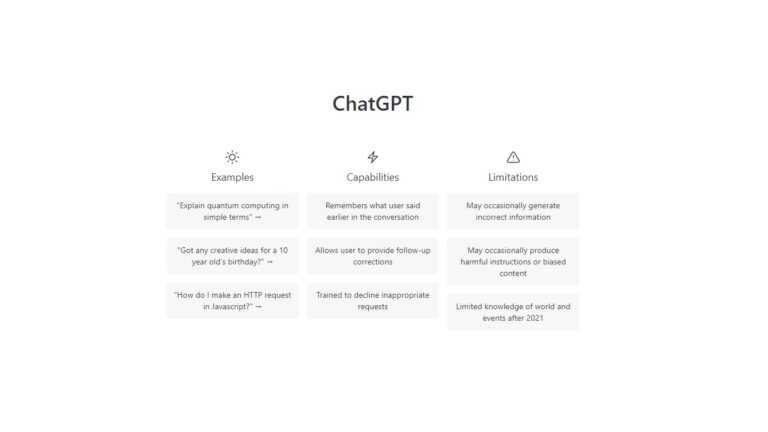

This framework empowers individual artists, who make up 70 percent of Japan’s 5.5 million cultural workers, to assert their rights without prohibitive legal fees. It signals a pivot toward proactive governance, blending consultation with advocacy for international treaties on AI ethics. As Sora 2 integrates into apps like ChatGPT, expect heightened scrutiny of model training datasets, which aggregate billions of web-scraped frames. The service underscores AI’s dual role as a creative accelerator and an existential risk to authorship integrity.