Instagram’s head says social media needs more explanation and detail because of AI

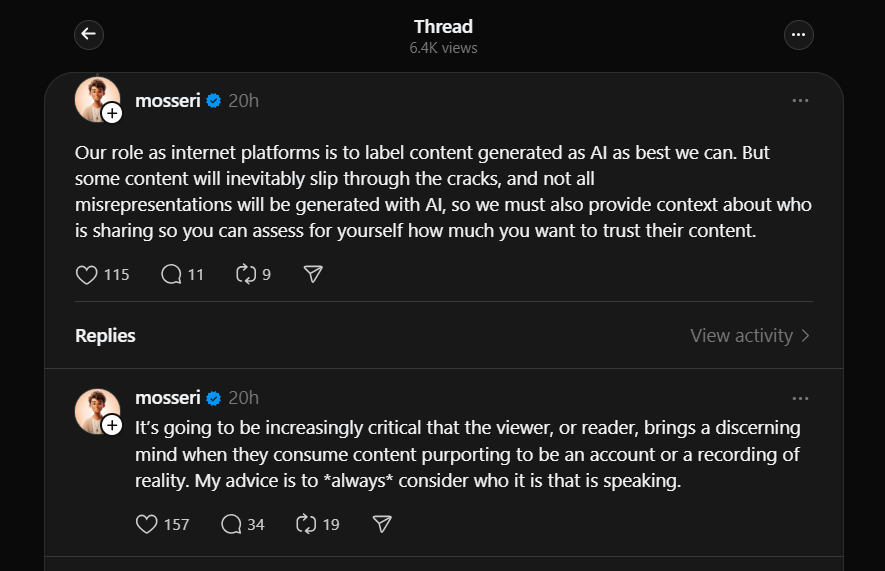

In a series of posts on Threads today, Instagram chief Adam Mosseri warned users not to take online images at face value, since AI can now produce content that is easily mistaken for reality. He emphasized the importance of questioning the source of what we see and called on social platforms to help users tell what is real.

Mosseri stated, “Our role as internet platforms is to label content generated as AI as best we can,” but acknowledged that not every piece of AI-generated content will get flagged. To bridge that gap, he suggested platforms should also give users context about the accounts sharing it, so people can make better-informed judgments about how reliable it is.

Mosseri’s posts are a reminder that, just as AI chatbots can confidently present false information, AI-generated images and claims deserve the same skepticism: it’s worth checking whether an account is credible before trusting what it posts. While Mosseri highlighted the need for transparency, Meta’s current tools don’t seem to provide much of the context he described. However, the company has hinted at upcoming changes to its content moderation policies.

What Mosseri describes resembles the user-driven moderation approaches seen on other platforms, such as Community Notes on X or YouTube’s moderation tools. Whether Meta plans to implement similar systems remains unclear, but the company has shown a willingness to borrow ideas from other platforms in the past.